Chapter Five: Assignments

Iterating on a design

I frequently assign students a project focused on rapid iteration. In this type of assignment, a student creates something (a presentation, a series of wireframes, a service blueprint) and then iterates on that artifact over, and over, and over. For example, in a class focused on digital product design, I ask students to redesign an existing digital product, such as a banking application. The instructions read:

Using the existing banking application:

- Identify ten problems in the current design.

- Redesign the application to fix those problems.

- User test your redesign with at least ten people.

- Hold a group critique with your classmates.

- Repeat this process.

In the first iteration, students inevitably produce an ugly, ill-structured, confusing redesign. I then have them user test this iteration with real people. We combine the results of user testing with an in-class critique, and students identify the main areas for improvement. Their next task is to work through the problem again, refining what they’ve made, and then to user test again, and so on. In an eight-week quarter, we work through seven iterations, each following the same model: create, test & critique, refine.

Each stage in the process comes with its own challenges.

Create a new design

When students create the first few iterations of their designs, they encounter two main problems. The first is that the problem itself, such as redesigning a banking application, is hard. The problems they have been assigned are ones that working professionals actually tackle; they are advanced problems. Students aren’t yet prepared to do a good job, so their early iterations will be poor. They will make bad design decisions, creating products that are hard to use or overly complicated. This is discouraging because students can see that the results are poor: their taste is stronger than their design abilities.

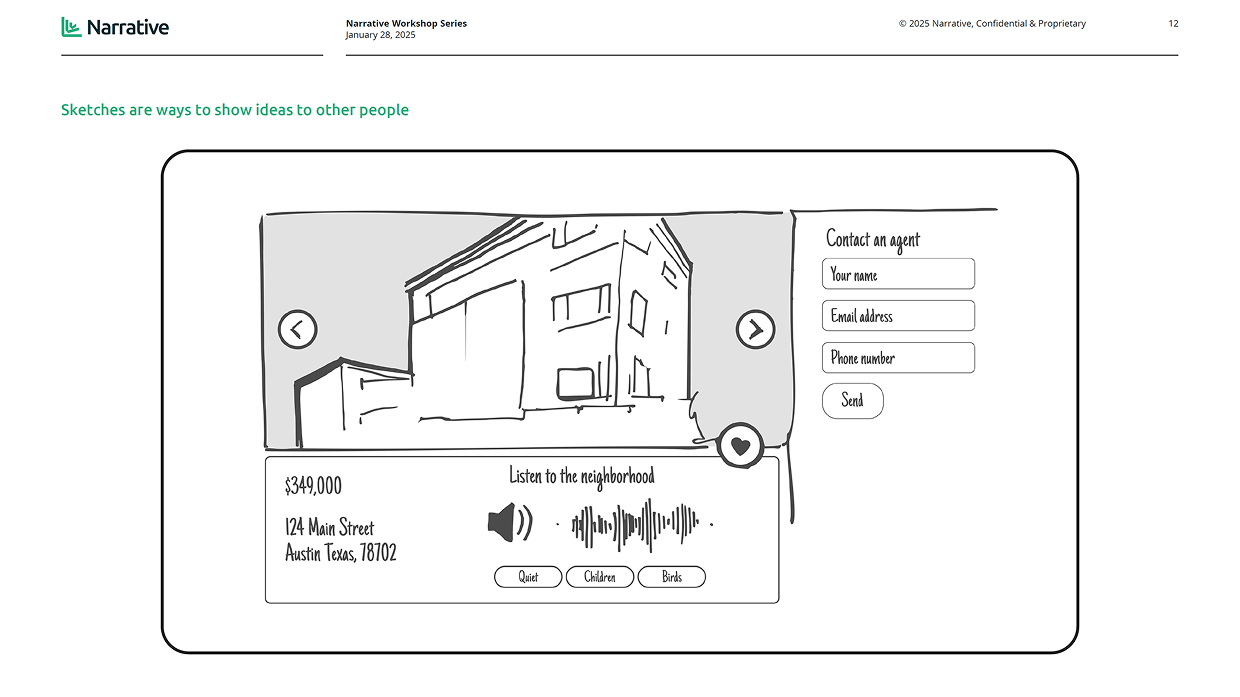

The second problem students encounter is that the fidelity of their design is poor, too. It doesn’t look the way they want, which makes it hard for someone else (a user, or a classmate) to understand their intention. When people see the design, they can’t comprehend what’s happening at a tactical, executional level: they won’t understand that a button is supposed to be clickable, that a slider can be dragged, that lorem ipsum-style text is actually instructional, and so on. This is equally frustrating for the student, because they know their own intent and have failed to communicate it to another person.

Test the design and hold a critique

The student is aware of both of these problems: their work product is confusing, and its execution is hurting comprehension. But they usually understand these problems only at a general level. They know something is wrong, but they haven’t yet learned to pinpoint exactly what.

User testing brings these problems into focus. Students use the think-aloud method I’ve already discussed: they present their work to people (I instruct them to test with people they’ve never met before, not their friends or classmates) and ask them to use their rough, sketched prototypes to accomplish specific goals. For example, when redesigning the banking application, a user may be prompted to “Use these wireframes to deposit a check, and talk out loud as you accomplish the task.” Students record what the user says, but they don’t intervene to help. They let the user continue with the task even when the user runs into problems or can’t tell what to do next.

This form of testing is critical to moving a design forward, as it produces further constraints for future iterations and highlights areas that need improvement. Testing helps students see that what they made is confusing, and, most importantly, it pins that confusion down in specifics. It’s not that the interface as a whole is confusing; it’s that this particular button is hard to see, or this specific text is full of jargon, or this navigation element is hard to understand. That specificity is actionable because the student now knows what to fix, and the problem feels more manageable. Instead of struggling to “fix the whole design,” the student can home in on specific changes (to a button, a piece of text, or a navigation element).

Testing also shows the student that their work is always malleable and that there isn’t a “done” state for design work. Usability testing will always surface problems, and testing each iteration produces actionable redesign recommendations. Students slowly begin to realize that the goal of design is not to “solve the problem all at once” but to “improve their work over time.”

In addition to testing with users, students test with their classmates through the methods of structured critique that we’ve already discussed. They pin their work on the wall, step back, and the class tries to understand what they did and why they did it. Through the critique, students identify problems, articulate solutions, and, most importantly, sketch those solutions directly on each other’s work.

Refine the design

The output of user testing and critique is detailed and actionable. These methods give the student a sense of what they need to change in future iterations. After testing and critique, students synthesize the findings from both methods to produce the next iteration.

Synthesis challenges them to identify the most important feedback and to make sense of it. Individual comments are often ill-structured and incomplete, and students need to translate them into something they can act on. They need to be selective and establish their own criteria for what to redesign: they prioritize only the feedback they see as productive, and turn those comments and suggestions into concrete changes.

Students work through seven full iterations (create, test & critique, refine), producing a new iteration each week. This fast cadence helps to instill and reinforce several key behaviors.

- Students learn to work quickly. They can’t labor over a single design, because there’s simply no time. The schedule doesn’t let them second-guess their own decisions. They need to make decisions without “all of the data” and move forward, relying on external feedback as the primary way to assess those decisions.

- Students realize that iteration always leads to improvement. Making something is not simply a way to communicate it to someone else; it’s a form of learning. Each iteration changes what the designer knows, and they become smarter about the design as they make it. More iterations produce more knowledge.

- Students learn the value of critique and user testing as a source of new provocations for design. They learn to ask for critique, rather than avoid it, because they see how external input helps them look at old problems in new ways. Usability testing reinforces that “the user is not like me” and helps them make their solutions more usable and useful.

- Students build confidence. They start out ashamed of what they make, because it doesn’t make sense, doesn’t look the way they want it to, and feels incomplete and sloppy. Over the course of the quarter, this changes. Their artifacts look more realistic and better capture their intent: they seem more cohesive and more professional. Students feel more competent and more confident in their decisions.